The term big data is so common now, at the time of this posting in 2015, that it deserves some mention in the data warehouse space. But where did it come from, and where should it fit in your technology infrastructure?

The personal computer and the birth of networking via Local Area Networks (LANs) allowed corporate enterprises to sprout up, and their transaction data dominated the early market. Enterprises and their transactional ERP systems or custom relational applications gave rise to the relational data marts and data warehouses that dominated the 1990s and 2000s.

By 2007-2008, the era of Web 2.0 was in full swing. This is significant because, before then, the static web only shared information on a one-way basis, pushing info toward the user. Users typically could not share their responses or information back on websites except via forms. Web 2.0 gave birth to the technology behind social media and companies like Facebook and Myspace. User information was now rampant and growing exponentially on the web for everyone to digest. This looked very similar to enterprise data, but there were some differences.

The first was the variety of the data, which was much broader: structured and unstructured text, video, and photos. The second was the volume, which was much larger since millions of users registered on these systems compared to thousands in an enterprise, and usage was much higher. The third was velocity: the rate at which data was being captured could no longer be handled by traditional relational databases.

Let’s see why these large volumes of data overpowered the traditional ways of processing them. We have data on disk, memory (RAM), and a CPU to process the data. As the data got bigger, the first approach was to scale the hardware vertically by adding more RAM and CPU power. This solution quickly hit a wall because there are physical limits on how powerful we can make one computer.

Google’s papers on the Google File System (GFS) and on a concept called map/reduce were a stab at tackling this growing issue within those limitations. The basis was to use commodity hardware and scale outward instead of upward, and to take the computation to the data instead of pushing all the data to a common CPU and RAM. The map/reduce process would then distribute the work and aggregate the results.
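
To make the map/reduce idea concrete, here is a minimal single-machine sketch of a word count. It is an illustration only, not Google’s or Hadoop’s implementation: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for this example, and each string in `chunks` merely stands in for a block of data that would live on a separate node.

```python
from collections import defaultdict


def map_phase(chunk):
    """Map: emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(mapped_pairs):
    """Shuffle/sort: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: aggregate the grouped values for each key."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(chunks):
    # Each chunk stands in for a block of data on a different machine.
    # On a real cluster the map calls would run in parallel, next to the data;
    # here they simply run one after another.
    mapped = []
    for chunk in chunks:
        mapped.extend(map_phase(chunk))
    return reduce_phase(shuffle(mapped))


if __name__ == "__main__":
    print(word_count(["big data is big", "data is everywhere"]))
```

The point of the split is that `map_phase` only ever needs to see its own chunk, so adding more machines adds more capacity without any single machine having to hold all the data.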

This sparked a revolution in how to capture, store, and process these volumes of data. The three main factors of variety, volume, and velocity, together with this new way of thinking, culminated in BIG DATA. This phenomenon now demanded a plethora of new technologies to support the new approach and its needs.

This was the driver that gave birth to Hadoop (based on the Hadoop Distributed File System, aka HDFS, plus map, sort and shuffle, then reduce), developed largely by a team at Yahoo, and, in turn, to a variety of NoSQL databases. These databases fall into 4 main categories depending on how the data is stored:

- Graph

- Document

- Key-Value Pairs

- Column Store
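
As a rough illustration of how the same information is shaped in each of the four categories, here is a sketch using plain Python structures. The customer, product, and field names are hypothetical, and each structure is a deliberate simplification of what a real database in that category stores.

```python
import json

# Key-value: an opaque value looked up by a single key.
key_value_store = {"customer:42": json.dumps({"name": "Ann", "city": "Boston"})}

# Document: a nested, self-describing record that can be queried by field.
document_store = [
    {
        "_id": 42,
        "name": "Ann",
        "address": {"city": "Boston"},
        "orders": [{"sku": "J-100", "price": 29.0}],
    }
]

# Column store (simplified): a row key maps to groups of related columns.
column_store = {
    "42": {
        "profile": {"name": "Ann", "city": "Boston"},
        "orders": {"J-100": 29.0},
    }
}

# Graph: nodes plus typed edges that connect them.
graph_store = {
    "nodes": {"c42": {"label": "Customer", "name": "Ann"},
              "p100": {"label": "Product", "sku": "J-100"}},
    "edges": [("c42", "BOUGHT", "p100")],
}

# The same question, "which city does customer 42 live in?", asked of three shapes.
city_kv = json.loads(key_value_store["customer:42"])["city"]
city_doc = next(d for d in document_store if d["_id"] == 42)["address"]["city"]
city_col = column_store["42"]["profile"]["city"]

# A question that suits the graph shape: what did customer c42 buy?
bought = [dst for src, rel, dst in graph_store["edges"]
          if src == "c42" and rel == "BOUGHT"]
```

Which shape fits best depends on the questions you ask most often: simple lookups by key, queries into nested records, reads over a few columns of many rows, or traversals of relationships.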

BIG DATA TOOLS

Here is part of the ecosystem of new technology that emerged to manage these big data demands.

STORE AND READ

Hadoop – an open-source implementation of these ideas, using HDFS for storage and map/reduce for processing.

Hive – a SQL-like query layer for Hadoop, which on its own has no SQL interface. This allows us to capitalize on the existing SQL skill sets of the workforce.

Apache Drill is modeled on Google’s Dremel, the engine behind Google BigQuery, and offers a Dremel-style query language (DrQL). It is the front end to query, plan, execute, and store data. Its strength is getting to nested data: it allows queries over nested documents, which is quite powerful.

Spark looks like the Hadoop architecture of a cluster manager with worker nodes, but with the added advantage of in-memory processing. Spark can be viewed as the next generation of big data tools, as it has exceeded Hadoop’s benchmarks using fewer machines and less CPU processing power.

Shark is to Spark what Hive is to Hadoop. The fundamental difference is that queries run on the Spark execution engine instead of using map/reduce to interface with HDFS.

Presto, from Facebook, is similar to the Hadoop architecture and sits on HDFS. All of these query tools allow interactive, near real-time querying of BIG DATA.

PROCESS USING STREAMS

You may want to look at my thoughts on stream processing, i.e., processing data continuously, as soon as it enters the system. This is very different from the polling techniques traditionally used by many application architectures.

Apache Storm, open-sourced by Twitter, uses the same 3-level architecture (Nimbus, ZooKeeper, Supervisor) to manage worker nodes. The key concepts are: Tuples, which are ordered lists of elements; Streams, which are unbounded sequences of tuples; and Spouts, which are the sources of streams in a computation. Bolts process input streams and produce output streams. They can run functions, filter, aggregate or join data, or talk to databases. Topologies are the overall computation, represented visually as a network of spouts and bolts.
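
The spout, bolt, and topology concepts can be sketched with ordinary Python generators. This is an analogy only and does not use the Storm API: the function names are invented for this example, and the finite list of sentences stands in for what would be an unbounded stream in a real deployment.

```python
from collections import Counter


def sentence_spout(sentences):
    """Spout: the source of a stream; emits one tuple at a time."""
    for sentence in sentences:
        yield (sentence,)


def split_bolt(stream):
    """Bolt: consumes sentence tuples and emits one tuple per word."""
    for (sentence,) in stream:
        for word in sentence.split():
            yield (word.lower(),)


def filter_bolt(stream, stop_words):
    """Bolt: drops tuples whose word is in the stop list."""
    for (word,) in stream:
        if word not in stop_words:
            yield (word,)


def count_bolt(stream):
    """Bolt: keeps a running count and emits (word, count) for every input tuple."""
    counts = Counter()
    for (word,) in stream:
        counts[word] += 1
        yield (word, counts[word])


def run_topology(sentences, stop_words=frozenset({"the", "is"})):
    # The "topology" is just the wiring: spout -> split -> filter -> count.
    stream = count_bolt(filter_bolt(split_bolt(sentence_spout(sentences)), stop_words))
    return list(stream)


if __name__ == "__main__":
    for word, count in run_topology(["the data is big", "big data"]):
        print(word, count)
```

Because each stage pulls one tuple at a time, a result is available as soon as an input arrives, which is the contrast with batch-style polling described above.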

How can you use BIG Data in your organization?

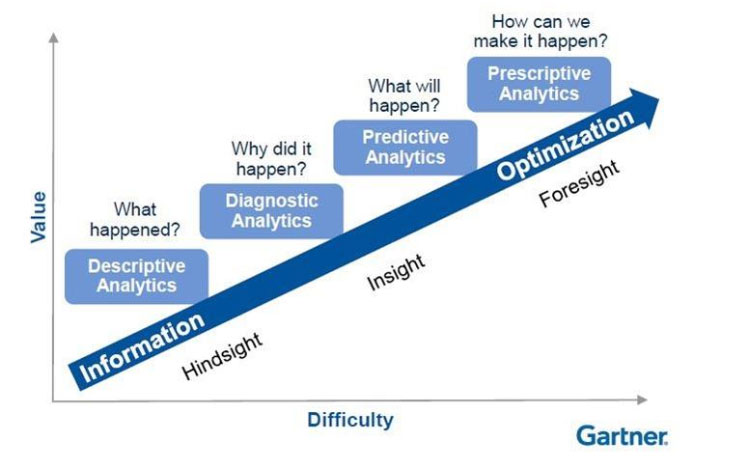

The data owner must merge user interactions, demographic, geographic, psychographic historical buying info, and behavioral profile information to produce powerful insights and customer segments. The utopian dream of the business intelligence world is to reach a segment of 1, but in the meantime, we continue to make smaller and smaller segments to make offerings more custom. Sentiment analysis is then added over these vast and growing merged data sets. This augmented data is very powerful, but there is also the challenge of navigating the data and finding the patterns. Clearly, the main benefit of this organized data is TARGETING. We can quickly target the ideal customer and build a compelling strategy that is hard to ignore. Check out my article on “why invest in predictive models” for more on this. The main challenge has been putting the unstructured data and structured ERP data together, especially in real time. It remains the challenge of the 2010s and beyond.

- Determine how much data you have, how many different data sources there are, and which grain and content can be merged.

- What new insight patterns can be gained by putting this data together?

- Are there predictive models that you would like to calculate over this big data?

- Pick a NoSQL database that matches your needs

- Set up the infrastructure

- Merge all your data sets

- Develop case studies of how you want to segment your customer profiles

- Pull the matching qualifying rows

- Create actionable strategies on these newly targeted data points
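
The merge, segment, and pull steps in the list above can be sketched in a few lines. This is a toy illustration under stated assumptions: the three data sets, their field names, and the helper functions `merge_by_customer` and `pull_segment` are all hypothetical, and a real implementation would run against whichever data store you picked rather than in-memory dictionaries.

```python
def merge_by_customer(*sources):
    """Merge several {customer_id: {field: value}} data sets into one profile each."""
    merged = {}
    for source in sources:
        for customer_id, fields in source.items():
            merged.setdefault(customer_id, {}).update(fields)
    return merged


def pull_segment(profiles, **criteria):
    """Return the ids of customers whose profile matches every criterion."""
    return sorted(
        customer_id
        for customer_id, profile in profiles.items()
        if all(profile.get(field) == wanted for field, wanted in criteria.items())
    )


# Hypothetical sample data, keyed by customer id.
demographic = {1: {"age_band": "25-34"}, 2: {"age_band": "45-54"}, 3: {"age_band": "25-34"}}
geographic = {1: {"region": "NE"}, 2: {"region": "NE"}, 3: {"region": "SW"}}
behavioral = {1: {"price_point": "clearance"}, 2: {"price_point": "full"},
              3: {"price_point": "clearance"}}

profiles = merge_by_customer(demographic, geographic, behavioral)
segment = pull_segment(profiles, age_band="25-34", region="NE", price_point="clearance")
print(segment)
```

Each extra criterion narrows the segment, which is how you move toward the "segment of 1" mentioned earlier.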

Case Study: Here is an example of how Abercrombie (A&F) makes me a loyal buyer

- User interactions: A&F sends daily email campaigns; the emails I read and the links I click let them know my interests.

- Visits to the A&F website: time spent on site, entry pages, navigated path/pages, exit page, and sales funnel drop-off. They study my navigation patterns, while browsing warms me to new products and lets me know what a bargain price is.

- Demographic: they have my demographic info, so they can derive my buying power

  - my age

  - gender

  - marital status

  - possible occupation

- Geographic: they know where I buy

  - Region: North East, State

  - zip code: city dweller

- Psychographic: buying history based on past SKUs I bought: edgy, youthful

  - they have an idea of my style: trendy

  - based on scraping social network sites: high social profile

- Behavioral profile: they know my brand loyalty with A&F: buys this brand consistently

  - price point: buys heavy discounts or clearance

Strategies Prescribed

- Raise awareness of new products in my wheelhouse, same great brand but new fit and style

- adopt edgy, youthful styles in my price range

- buy TV time in the NE cities

- Drive it on social media

- Drive direct contact via emails

//thoughts to develop

Flume to listen > Amazon with Storm topology for processing > stored on Amazon S3

Esper – query like

Spark Streaming

Apache S4