In today’s data-driven world, the evolution of data architecture and engineering is not just a technical upgrade—it’s a strategic necessity. Modern enterprises must transform raw data into intelligent insights rapidly and at scale. Let’s explore the core pillars of this transformation and why they matter.

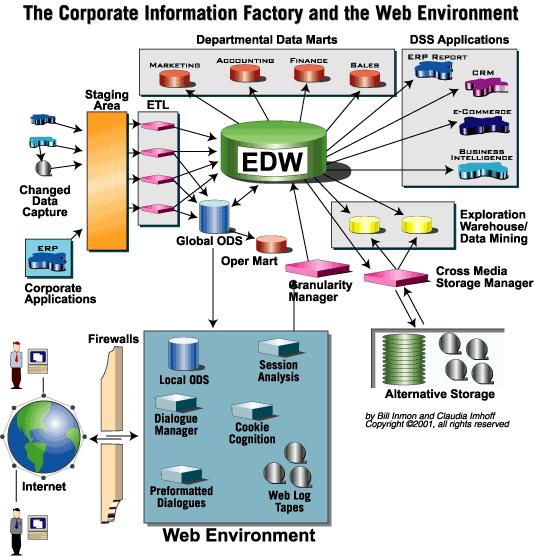

From ER Models to Dimensional Models: A Performance-Driven Shift

Entity-Relationship (ER) models were the backbone of transactional systems for decades. However, their highly normalized tables force analytical queries through long chains of joins, which hurts both performance and usability. Enter dimensional modeling, the foundation of effective data warehouses. By separating measurable facts from the descriptive dimensions used to slice them, this approach simplifies complex queries and speeds up report generation. The goal is not only to model data but to make it accessible to decision-makers.

Star Schemas & Coverage Tables: Designing for Sparse & Scalable Analysis

Modern data is often sparse and high-dimensional. A single flat table wastes space on empty columns, while a fully normalized model needs many joins to answer one analytical question. Star schemas, with a central fact table linked to surrounding dimension tables, address both problems. They:

- Reduce query complexity

- Enable parallel processing

- Support multi-dimensional analysis
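
As a minimal sketch of these ideas, the example below builds a tiny star schema in an in-memory SQLite database and runs one multi-dimensional query against it. The table and column names (`fact_sales`, `dim_product`, `dim_date`) and the sample rows are illustrative assumptions, not taken from any particular warehouse.

```python
import sqlite3


def build_star_schema(conn):
    """Create one fact table and two dimension tables (hypothetical names)."""
    conn.executescript("""
        CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
        CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
        -- The fact table holds only foreign keys and numeric measures.
        CREATE TABLE fact_sales (
            product_key INTEGER REFERENCES dim_product(product_key),
            date_key INTEGER REFERENCES dim_date(date_key),
            amount REAL
        );
        INSERT INTO dim_product VALUES (1, 'Books'), (2, 'Games');
        INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
        INSERT INTO fact_sales VALUES
            (1, 20240101, 10.0), (1, 20240201, 15.0), (2, 20240101, 40.0);
    """)


def sales_by_category_and_month(conn):
    """Aggregate the fact table along two dimensions with one join each."""
    return conn.execute("""
        SELECT p.category, d.month, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_key = f.product_key
        JOIN dim_date AS d ON d.date_key = f.date_key
        GROUP BY p.category, d.month
        ORDER BY p.category, d.month
    """).fetchall()


conn = sqlite3.connect(":memory:")
build_star_schema(conn)
print(sales_by_category_and_month(conn))
# [('Books', 1, 10.0), ('Books', 2, 15.0), ('Games', 1, 40.0)]
```

Every dimension is exactly one join away from the fact table, which is what keeps queries like this short no matter how many dimensions are added.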

Coverage tables, meanwhile, help track completeness, which is especially important when analyzing partial datasets across geographies, products, or timelines. They’re critical when “what’s missing” is just as important as “what’s there.”
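
One way to picture this is as an anti-join: the coverage table lists every combination that is expected, and any row without a matching fact is a gap. The sketch below assumes a hypothetical `coverage` table keyed by region and product; real coverage tables are usually keyed by dimension surrogate keys and often include a time period.

```python
import sqlite3


def missing_combinations(conn):
    """Return expected (region, product) pairs that have no sales facts."""
    return conn.execute("""
        SELECT c.region, c.product
        FROM coverage AS c
        LEFT JOIN fact_sales AS f
            ON f.region = c.region AND f.product = c.product
        WHERE f.region IS NULL          -- no matching fact row was found
        ORDER BY c.region, c.product
    """).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE coverage (region TEXT, product TEXT);
    CREATE TABLE fact_sales (region TEXT, product TEXT, amount REAL);
    -- Every combination we expect to see data for (sample values).
    INSERT INTO coverage VALUES ('EU', 'A'), ('EU', 'B'), ('US', 'A'), ('US', 'B');
    -- What actually arrived: nothing for product B in the EU.
    INSERT INTO fact_sales VALUES ('EU', 'A', 5.0), ('US', 'A', 7.0), ('US', 'B', 3.0);
""")
print(missing_combinations(conn))  # [('EU', 'B')]
```

Without the coverage table, the missing EU/B combination would simply be absent from every report rather than flagged as a gap.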

The Shift: From “Get Data In” to “Get Data Out”

Historically, data warehouses focused on ingestion: getting every source loaded. But modern demands have shifted the focus to delivery. It’s about:

- Speed to insights

- Low-latency reporting

- Self-service analytics

In other words, data warehouses must now serve as engines of output, not just archives of input.

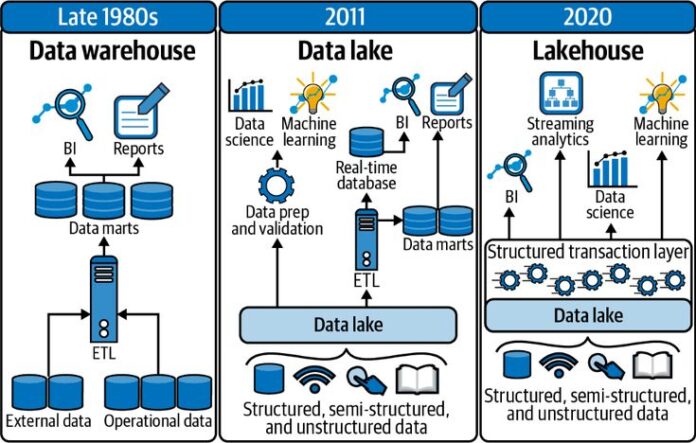

ETL and MPP: Scaling the Backbone of Data

Extract, Transform, Load (ETL) remains essential, but it now comes in more dynamic variations: Extract, Load, Transform (ELT), streaming, and Change Data Capture (CDC). Pair these with Massively Parallel Processing (MPP) engines and you have the horsepower to process petabytes of data, particularly with ELT, where transformations run inside the engine after the data is loaded.
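
To make CDC concrete, here is a small sketch of how a stream of change events might be applied to a target table. The event shape (`op`, `key`, `row`) and the function name `apply_changes` are assumptions made for illustration; real CDC tools each define their own event formats.

```python
def apply_changes(target, changes):
    """Apply ordered change events to a target table held as {key: row}.

    Each event is a dict with an 'op' of 'insert', 'update', or 'delete',
    a 'key', and (for insert/update) the new 'row'. Hypothetical format.
    """
    for change in changes:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            # Upsert: the latest version of the row wins.
            target[key] = change["row"]
        elif op == "delete":
            # Ignore deletes for keys that are already gone.
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return target


customers = {1: {"name": "Ada"}}
events = [
    {"op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"op": "insert", "key": 2, "row": {"name": "Bob"}},
    {"op": "delete", "key": 1},
]
print(apply_changes(customers, events))  # {2: {'name': 'Bob'}}
```

Only the changed rows travel through the pipeline, which is why CDC suits low-latency delivery better than reloading full tables.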

Technologies like Spark/Databricks, Snowflake, and BigQuery exemplify this shift. Each is built to scale and distribute workloads efficiently across multiple nodes.
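
The core MPP pattern can be sketched in a few lines: hash-partition rows by key, aggregate each partition independently, then merge the partial results. The example below is a toy illustration of that pattern only. It uses threads in a single process as stand-ins for nodes, and the function names are hypothetical; it does not reflect how any of the engines above are implemented.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n_nodes):
    """Hash-partition (key, value) rows so each key lands on exactly one node."""
    parts = [[] for _ in range(n_nodes)]
    for key, value in rows:
        node = zlib.crc32(key.encode("utf-8")) % n_nodes
        parts[node].append((key, value))
    return parts


def local_sum(rows):
    """Aggregate one partition without looking at any other partition."""
    totals = defaultdict(float)
    for key, value in rows:
        totals[key] += value
    return dict(totals)


def parallel_sum(rows, n_nodes=4):
    """Scatter rows to workers, aggregate locally, then gather the results."""
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(local_sum, partition(rows, n_nodes)))
    merged = {}
    for partial in partials:
        # Keys are disjoint across partitions, so a plain update is safe.
        merged.update(partial)
    return merged


sales = [("books", 10.0), ("games", 40.0), ("books", 15.0)]
print(parallel_sum(sales) == {"books": 25.0, "games": 40.0})  # True
```

Because each key lives on exactly one partition, no worker needs to coordinate with another during aggregation, which is the property that lets this pattern scale out across nodes.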

Understanding the Layers: From Metadata to Unstructured Data

A truly modern architecture respects the layered nature of data:

- Metadata Layer – Governs meaning, lineage, and structure

- Master Data – Core business entities like Customer, Product, Location

- Operational Data – Real-time or near-real-time data from systems of record

- Analytical Data – Cleaned, transformed, and structured for decision-making

- Unstructured Data – Logs, documents, media, and text—often untapped, yet rich in insights

This layered approach helps isolate complexity, support different access patterns, and enforce governance across systems.

💡 Final Thoughts

Modern data architecture is no longer just about building pipelines; it’s about engineering ecosystems where data flows seamlessly from ingestion to insight. It’s about designing for speed and scale, for humans and machines.

To win in the modern economy, organizations must think not only in rows and columns but in models, stories, and strategies.

Data is not just an asset; it’s the foundation of modern intelligence.