Ensemble methods are machine learning techniques that combine the predictions of multiple individual models (often called “base models” or, in the context of boosting, “weak learners”) to produce a more robust and accurate prediction. The idea is to let the strengths of some models compensate for the weaknesses of others. Ensemble methods are widely used to improve predictive performance, reduce overfitting, and help models generalize to unseen data. Some common ensemble methods include:

- Bagging (Bootstrap Aggregating):

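- In bagging, multiple base models are trained independently on bootstrap samples of the training data, that is, random samples drawn with replacement. Averaging the predictions of the individual models (or taking a majority vote for classification) reduces the variance of the final model.

- The most well-known algorithm using bagging is Random Forest, which combines multiple decision trees and additionally considers only a random subset of features at each split to make the trees less correlated.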

- Boosting:

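- Boosting is an ensemble technique that trains models sequentially, with each new model concentrating on the errors of the models before it. AdaBoost does this by assigning higher weights to the instances that previous models misclassified; gradient boosting instead fits each new model to the residual errors (the negative gradient of the loss) of the current ensemble.

- Popular boosting algorithms include AdaBoost and Gradient Boosting, with XGBoost being a widely used implementation of gradient boosting.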

- Stacking:

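- Stacking, also known as Stacked Generalization, involves training multiple base models and then training a meta-model on top of the base models’ predictions. These predictions are typically made on data the base models did not see during training (for example, through cross-validation), so that the meta-model does not simply learn to trust overfitted base models.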

- The meta-model is responsible for combining the predictions of the base models, effectively learning how to best weigh their contributions.
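
As a rough illustration of the stacking idea, the sketch below trains two toy base regressors, collects their predictions on held-out folds, and lets a very simple meta-model choose how to weigh them. Everything here is an assumption made for the example: the base models (`fit_mean`, `fit_line`), the fold layout, and the meta-model, which is reduced to a single blend weight found by grid search rather than a full learner.

```python
# Sketch only: model choices, fold layout, and names are illustrative assumptions.

def fit_mean(xs, ys):
    """Base model 1: always predicts the mean of the training targets."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def fit_line(xs, ys):
    """Base model 2: a 1-D least-squares line."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return lambda x: my + slope * (x - mx)

def out_of_fold_predictions(fit, xs, ys, k):
    """Predict each point with a model that never saw it during training."""
    preds = [0.0] * len(xs)
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in range(fold, len(xs), k):
            preds[i] = model(xs[i])
    return preds

def fit_stack(xs, ys, k=5):
    # Level 0: held-out predictions become the meta-model's training inputs.
    p_mean = out_of_fold_predictions(fit_mean, xs, ys, k)
    p_line = out_of_fold_predictions(fit_line, xs, ys, k)

    # Level 1: the meta-model is one blend weight w, chosen by grid search.
    def loss(w):
        return sum((w * pl + (1 - w) * pm - y) ** 2
                   for pl, pm, y in zip(p_line, p_mean, ys))
    weight = min((i / 10 for i in range(11)), key=loss)

    # Refit the base models on all the data for use at prediction time.
    mean_model, line_model = fit_mean(xs, ys), fit_line(xs, ys)

    def stacked_predict(x):
        return weight * line_model(x) + (1 - weight) * mean_model(x)

    return weight, stacked_predict

xs = list(range(10))
ys = [2 * x + 1 for x in xs]          # toy data that is exactly linear
weight, stacked_predict = fit_stack(xs, ys)
print(weight, stacked_predict(20))
```

On this toy data the line fits perfectly, so the meta-model puts all of its weight on it. With real data the weight would usually land somewhere in between, and the meta-model would more commonly be a regression or classification model in its own right.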

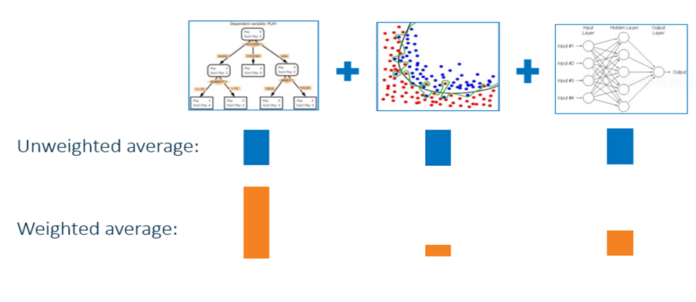
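
To make the reweighting step in boosting more concrete, here is a minimal sketch of a single AdaBoost-style round for binary classification. The function name `adaboost_round` and the toy inputs are assumptions made for illustration; a full implementation would also train a weak learner on the weighted data in every round and combine the learners using their `alpha` values.

```python
import math

def adaboost_round(weights, is_correct):
    """One AdaBoost reweighting step (illustrative sketch).

    weights:    current example weights, summing to 1
    is_correct: whether the current weak learner classified each example correctly
    """
    # Weighted error of the weak learner under the current weights.
    error = sum(w for w, ok in zip(weights, is_correct) if not ok)
    if not 0 < error < 0.5:
        raise ValueError("weak learner must beat chance and make at least one mistake")

    # The learner's say in the final vote; larger when its error is smaller.
    alpha = 0.5 * math.log((1 - error) / error)

    # Up-weight mistakes, down-weight correct examples, then renormalise.
    updated = [w * math.exp(-alpha if ok else alpha)
               for w, ok in zip(weights, is_correct)]
    total = sum(updated)
    return alpha, [w / total for w in updated]

weights = [0.2] * 5                               # start from uniform weights
is_correct = [True, True, True, True, False]      # the learner misses the last example
alpha, new_weights = adaboost_round(weights, is_correct)
print(alpha, new_weights)
```

After this round the single misclassified example carries half of the total weight (0.5) while each correctly classified example drops to 0.125, so the next weak learner is pushed to get the hard example right.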

- Voting:

- Voting ensembles combine the predictions of multiple base models by taking a majority vote (for classification) or averaging (for regression) to make the final prediction.

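- Common types of voting ensembles include Hard Voting, where each model casts one vote for a class and the majority wins, and Soft Voting, where the models’ predicted class probabilities are averaged (optionally with weights) and the class with the highest average probability wins.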

- Blending:

- Blending is a simpler variant of stacking. Instead of generating held-out predictions through cross-validation, blending splits the training data into two parts: the base models are trained on the first part, and the meta-model is trained on the base models’ predictions for the second, held-out part.

- Bootstrapped Ensembles:

- These ensembles draw multiple bootstrap samples (random samples with replacement) from the training data, train a model on each sample, and then combine their predictions. Bagging is the standard example of this approach.

- Examples include Bagged Decision Trees and Random Forests.
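
The bootstrap-and-combine procedure can be sketched in a few lines. The example below is a simplified illustration, not a reference implementation: the base model is a hypothetical one-dimensional decision stump (`fit_stump`), the data is a toy set, and the final prediction is a hard majority vote over the stumps.

```python
import random
from collections import Counter

def fit_stump(points):
    """Fit a 1-D decision stump that predicts 1 when x > threshold, else 0."""
    best_threshold, best_errors = None, len(points) + 1
    for threshold, _ in points:
        errors = sum(int(x > threshold) != y for x, y in points)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold

def fit_bagged_stumps(points, n_models, rng):
    """Train one stump per bootstrap sample of the data."""
    stumps = []
    for _ in range(n_models):
        # Bootstrap sample: same size as the data, drawn with replacement.
        sample = [rng.choice(points) for _ in points]
        stumps.append(fit_stump(sample))
    return stumps

def bagged_predict(stumps, x):
    """Combine the stumps with a majority (hard) vote."""
    votes = Counter(int(x > threshold) for threshold in stumps)
    return votes.most_common(1)[0][0]

# Toy data: the label is 1 for x >= 5 and 0 otherwise.
points = [(x, int(x >= 5)) for x in range(10)]
stumps = fit_bagged_stumps(points, n_models=25, rng=random.Random(0))
print(bagged_predict(stumps, 1), bagged_predict(stumps, 8))
```

Each stump sees a slightly different sample and may pick a slightly different threshold; the vote smooths out those differences. An odd number of models is used here so that a binary vote cannot tie.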

Ensemble methods are effective because different techniques address different sources of error: bagging mainly reduces variance by averaging many models, boosting mainly reduces bias by sequentially correcting earlier mistakes, and stacking or voting can benefit from both by combining diverse models. By combining different models, an ensemble can capture complex relationships in the data and lower the risk of poor predictions caused by the limitations of any single model. The choice of ensemble method depends on the specific problem, the characteristics of the data, and the trade-offs between model complexity and interpretability.
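
The variance-reduction effect of averaging can be checked with a small simulation. The sketch below is a deliberately idealized illustration: each "model" is just the true value plus independent Gaussian noise, and the names `noisy_model` and `ensemble` as well as the values for `truth` and `noise` are assumptions for the example.

```python
import random
import statistics

def noisy_model(rng, truth=10.0, noise=2.0):
    """Stand-in for one model's prediction: the truth plus independent noise."""
    return rng.gauss(truth, noise)

def ensemble(rng, n_models):
    """Average the predictions of n_models independent noisy models."""
    return statistics.fmean(noisy_model(rng) for _ in range(n_models))

rng = random.Random(0)
single = [noisy_model(rng) for _ in range(2000)]
averaged = [ensemble(rng, n_models=10) for _ in range(2000)]

var_single = statistics.pvariance(single)
var_averaged = statistics.pvariance(averaged)
print(f"single model: {var_single:.2f}, average of 10: {var_averaged:.2f}")
```

With independent errors, the variance of the average should come out at roughly a tenth of the single-model variance. Real base models are trained on overlapping data and make correlated errors, so the gain is smaller in practice: for n models with equal variance σ² and pairwise correlation ρ, the variance of their average is ρσ² + (1 − ρ)σ²/n, which is why ensembles benefit from making their members as diverse as possible.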