It is best practice to run validation as much as possible using lightweight validations. There are three moments we are interested in testing a data pipeline.

- Source Validation – checking if the source data is up to standard

- Transformation Validation – checking if the data was altered incorrectly during the transformation steps

- Result Validation – validations can be performed right before the results are published.

We can only test things that we assert to be true. Common validations checks to address:

- Structural Validations

- The shape of the DataFrame

- Data types and data lengths

- Column exists

- Data Quality Validations

- Null values Checks, especially on Output Keys

- Duplicate Records on Output Keys

- Expected Statistics

The Shape of the DataFrame

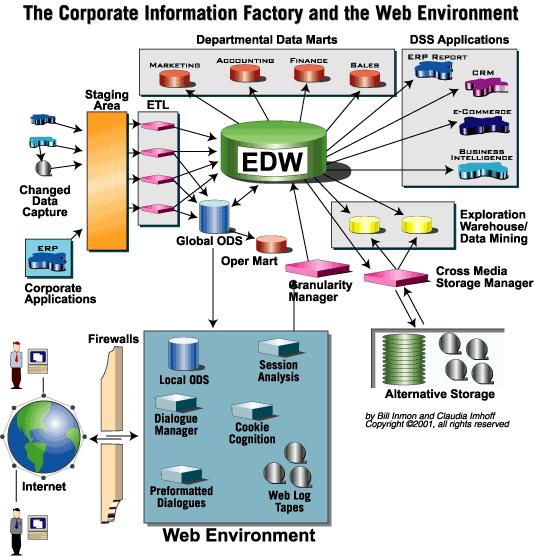

Data refactoring is changing but staying semantically similar: structural, data quality, architectural connectivity, the method is refactoring DB code, transformation change schema by adding new features

Column exists

Data types and data lengths

Null values Checks, especially on Output Keys.

Duplicate Records

Expected Statistics

Partial data loads fall into the worst error category because there is no apparent failure to alert you, but you are dropping rows and will continue to drop more and more rows along the pipeline as you join and select. If you are lucky, your Data Validation (DV) check picks it up before it sees the light of day.

Image a 3-step process where you have two base tables building a translation table that is used to build other tables. If a base table has a partial load, the translation table will have partial results, giving sporadic output. You will need to know your data very well to catch such a problem by eye.

TIP: If you have a static load, you should have a check count to ensure you get a full read as part of your Data Validation